At its core, customer service quality assurance (QA) is simply the process of reviewing your team’s conversations with customers to make sure they’re hitting the mark. It’s not about micromanaging or catching people making mistakes. Instead, it’s about creating consistent, high-quality experiences that keep customers coming back and protect the reputation you’ve worked so hard to build.

Why Quality Assurance Is a Non-Negotiable for E-commerce

Think of your customer support team as an extension of your product. You wouldn’t dream of shipping an order without checking it first, right? The same logic applies to every single customer conversation. For any e-commerce brand, each interaction—whether it’s a simple "Where's my order?" query or a complicated return—is a moment that can either win you a customer for life or send them running to a competitor.

This is where customer service QA comes in. It’s the system you create to make sure every single interaction lives up to your brand’s promise. Think of a chef in a Michelin-starred restaurant who tastes every dish before it leaves the kitchen. QA is your head chef, ensuring every support ticket served to a customer is just right.

The True Cost of Inconsistent Support

Without a formal QA process, your support quality becomes a lottery. One customer gets a lightning-fast, empathetic, and perfectly helpful reply. The next gets a slow, copy-pasted response that doesn’t even answer their question. That kind of inconsistency is a silent killer of customer loyalty.

It’s no surprise that 86% of organizations agree that reviewing conversations is a direct path to better customer service. When your support is a roll of the dice, trust disappears, and customers think twice before buying from you again. A single bad experience can completely wipe out all the time and money you spent on marketing to get that customer in the door.

A strong customer service quality assurance program turns your support team from a reactive cost center into a proactive driver of loyalty and revenue. It provides the data needed to coach agents effectively, identify procedural gaps, and refine your overall service strategy.

Building a Foundation for Growth

A well-designed QA program does more than just score conversations; it creates a clear roadmap for what "great" actually looks like. By setting objective standards, you give your agents the tools and confidence they need to succeed. For a growing e-commerce store, this systematic approach pays off in a few key ways:

- Identifies Coaching Opportunities: QA spots the exact areas where agents need a hand, so you can offer specific, helpful coaching instead of vague feedback.

- Enhances Agent Performance: When agents have clear goals and get constructive feedback, they feel more capable and engaged, which boosts morale and reduces turnover.

- Drives Customer Loyalty: Consistently helpful, on-brand interactions build the kind of trust that turns first-time buyers into passionate advocates. You can learn more about how to improve the e-commerce customer experience and see how QA fits into the bigger picture.

- Uncovers Operational Insights: Digging into support tickets often reveals recurring issues with your products, website, or shipping carriers long before they become massive problems.

The Pillars of a Modern E-commerce QA Program

A solid customer service QA program isn’t about random spot-checks or just a gut feeling. It’s a carefully constructed system, with each part supporting the others to create amazing customer experiences, every single time. Think of it like building a high-performance engine for your Shopify store; you can't just throw parts together. Every component needs to be perfectly tuned for the whole thing to run smoothly.

Without this structure, your QA efforts will feel subjective and inconsistent. Agents get frustrated, and your grading won't lead to real improvement. By putting these pillars in place, you create a clear, repeatable process that actually moves the needle.

Defining Your Quality Standard

Before you can measure anything, you have to define what “good” looks like. What is an A+ customer interaction for your brand? This is your quality standard—the north star that guides your entire QA program. It’s so much more than a list of rules; it's a living document that reflects your brand’s personality, voice, and the promise you make to your customers.

For an e-commerce brand, this standard might include things like:

- Accuracy: Was the correct shipping policy or product spec given?

- Brand Tone: Did the agent sound like your brand—friendly, professional, witty?

- Empathy: Did the agent truly connect with a customer’s frustration over a delayed order?

- Solution: Was the problem actually solved on the first try?

This standard becomes the bedrock of your support team. To help agents meet it consistently, back it with a knowledge base that serves as their single source of truth and doubles as a self-service resource for customers.

Creating a Balanced QA Scorecard

Once you know what quality means, you need a tool to measure it. That’s where the QA scorecard comes in. This is the rubric your team will use to grade conversations objectively, turning your quality standard into something tangible. A well-designed scorecard breaks down an interaction into key categories, assigning points based on how well the agent performed in each one.

A balanced scorecard for a Shopify store, for instance, might have sections for procedural things (like correctly tagging tickets), the quality of the solution provided, and of course, communication style. Using a scorecard is the difference between giving vague feedback like "be more helpful" and offering specific, actionable insights.

A great scorecard doesn't just measure what happened; it measures what matters. It directly connects an agent’s actions to the customer's experience and your business goals, making it a powerful tool for coaching and development.

Strategic Conversation Reviews

With hundreds or thousands of tickets flooding in, reviewing every single one by hand isn’t realistic. That’s why strategic conversation reviews are so important. Instead of just picking tickets at random, you need to focus your limited time on the interactions that will give you the most valuable insights.

This means hunting for specific kinds of conversations:

- Complex Issues: Tickets that required a multi-step solution, like a tricky return or a damaged item claim.

- Negative CSAT Scores: Any conversation that ended with a bad customer satisfaction rating should jump to the top of your review list.

- High-Value Orders: You’ll want to pay extra attention to interactions with your most valuable customers.

- Escalated Tickets: When a conversation gets passed up to a manager, it’s a goldmine for identifying potential training gaps.

This targeted approach ensures your review time is spent where it will make the biggest difference for your team’s performance and your customers' happiness.
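If your helpdesk lets you export tickets, the targeting rules above can be sketched in a few lines of Python. This is only an illustration: the `Ticket` fields, the thresholds, and the idea of using reply count as a stand-in for complexity are all assumptions, so adjust them to whatever data your own tools expose.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Ticket:
    ticket_id: str
    csat: Optional[int] = None   # 1-5 survey rating, None if the customer did not respond
    order_value: float = 0.0     # order total in your store currency
    escalated: bool = False      # conversation was passed up to a manager
    reply_count: int = 1         # agent replies, used here as a rough proxy for complexity


def review_priority(ticket: Ticket,
                    low_csat_max: int = 2,
                    high_value_min: float = 250.0,
                    complex_reply_min: int = 4) -> int:
    """Count how many of the four review triggers a ticket matches."""
    triggers = [
        ticket.csat is not None and ticket.csat <= low_csat_max,  # negative CSAT
        ticket.order_value >= high_value_min,                     # high-value order
        ticket.escalated,                                         # escalated ticket
        ticket.reply_count >= complex_reply_min,                  # complex issue
    ]
    return sum(triggers)


def build_review_queue(tickets: list[Ticket], limit: int = 10) -> list[Ticket]:
    """Return tickets matching at least one trigger, highest priority first."""
    flagged = [t for t in tickets if review_priority(t) > 0]
    flagged.sort(key=review_priority, reverse=True)
    return flagged[:limit]
```

A ticket that hits several triggers at once (say, an escalated, low-CSAT conversation about a large order) lands at the top of the queue, which matches the spirit of spending review time where it matters most.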

Team Calibration and The Coaching Loop

These final two pillars are all about consistency and growth. Team calibration is the process of getting all your reviewers on the same page. It’s simple: have multiple people score the exact same ticket, then get together and talk through any differences in their ratings. This crucial step strips away subjectivity and ensures a "10/10" interaction means the same thing to everyone, which builds trust in the whole QA process.

From there, you move right into the coaching loop, which is where all these insights turn into action. The data from your scorecards is used to give targeted, constructive feedback to agents. It’s a continuous cycle: you review conversations, find coaching opportunities, deliver personalized guidance, and then measure the impact in future reviews. This is how QA transforms from a simple audit into a powerful engine for your team's development.

How to Build a High-Impact QA Scorecard

Alright, we’ve covered the big-picture concepts. Now it's time to roll up our sleeves and build the most important tool in your QA toolbox: the QA scorecard.

Think of the scorecard as the heart of your entire quality program. It’s what turns your brand’s abstract ideas about "good service" into something concrete and measurable. It’s the rubric you’ll use to grade conversations, guide your agents, and ultimately, drive real improvement.

A truly effective scorecard doesn't just spit out a number. It creates a detailed performance map for each agent, showing you exactly where they’re knocking it out of the park and which specific habits need a bit of coaching. For an e-commerce brand, this means getting beyond a simple pass/fail grade to something that actually captures the nuances of a great customer interaction.

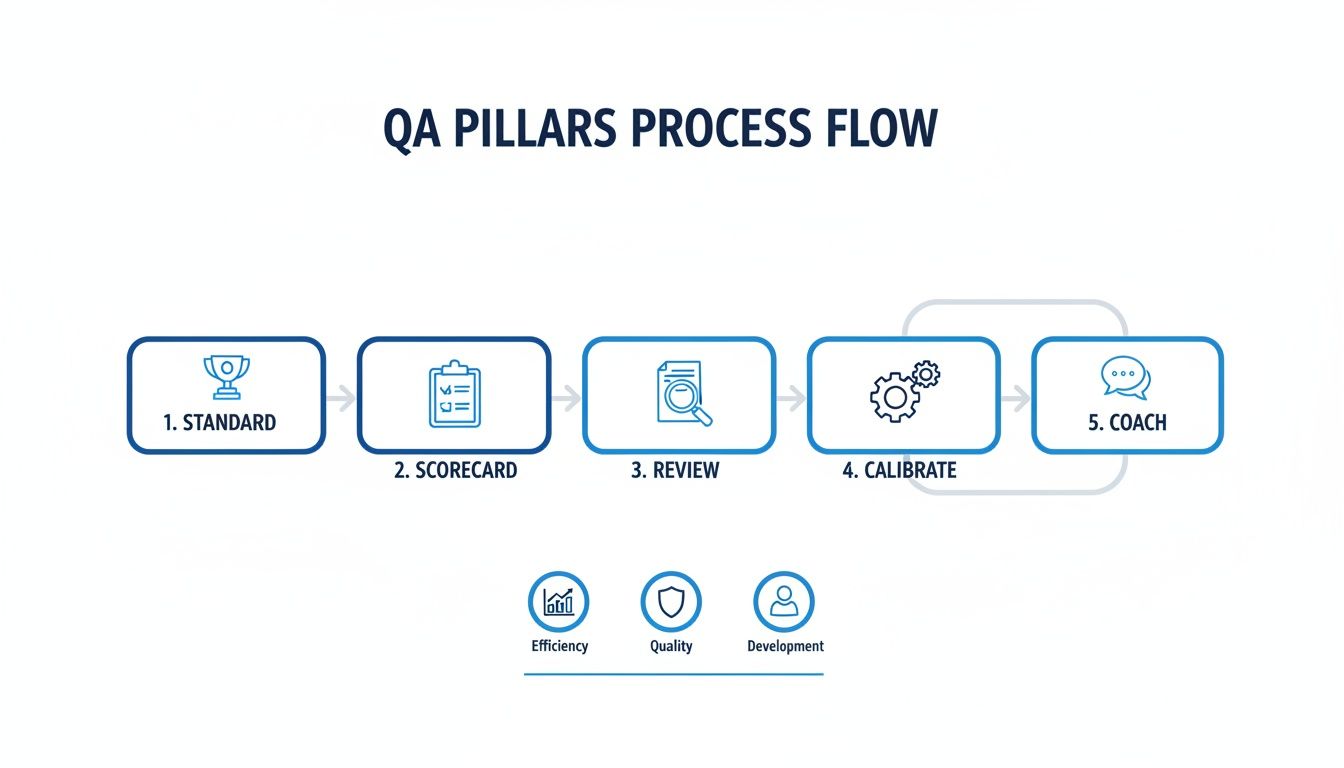

This process flow shows how the scorecard fits into the bigger picture of a healthy QA cycle.

As you can see, crafting the scorecard is a foundational step. Everything else—how you review tickets, how your team gets on the same page during calibrations, and how you coach for growth—flows directly from it.

Identifying Your Core Categories

First things first: you need to decide what you’re actually going to measure. A classic rookie mistake is to build a scorecard with a dozen or more tiny, granular line items. This just makes grading a nightmare and buries any meaningful insights under a pile of data.

Instead, keep it simple. Start with four or five high-level categories that reflect what really matters to your customers and your business.

For a Shopify store handling most of its support over email, these categories are a fantastic place to start:

- Solution Quality: Did the agent actually solve the customer's problem? Was the answer accurate, complete, and relevant to their specific situation?

- Procedural Accuracy: Did the agent follow the internal playbook? This covers everything from tagging tickets correctly and using the right macros to logging refund details properly in Shopify.

- Brand Tone and Empathy: Did the agent sound like a human who works for your brand? More importantly, did they show real empathy and make the customer feel heard?

- Proactive Support: Did the agent go above and beyond? Maybe they anticipated the customer's next question, offered a helpful tip, or spotted a recurring issue and flagged it for the team.

This mix gives you a balanced view, covering both the technical side of the job and the all-important human touch.

Setting Up a Nuanced Scoring System

Once your categories are set, you need to figure out how to score them. A binary Yes/No system is easy, but it misses all the subtlety. An interaction is rarely all good or all bad.

A far better approach is a weighted scoring system. This just means you assign more points to the categories that have a bigger impact on the customer experience.

For instance, getting the solution right is non-negotiable. It’s far more critical than, say, a missed ticket tag. So, you might make "Solution Quality" worth 40 points out of 100 while "Procedural Accuracy" is worth 20. This weighting ensures your final QA score reflects what the customer actually cares about.

You can also use a simple 1-5 scale within each category. This gives your reviewers the flexibility to distinguish between a response that was just okay and one that was truly exceptional.

The goal of a scorecard isn't to punish but to illuminate. A well-weighted system ensures that your quality score is a true reflection of the customer's experience, focusing feedback on what truly matters.

Defining Critical Fails

Let's be honest: some mistakes are way worse than others. A critical fail (sometimes called an "auto-fail") is an error so significant that it immediately results in a score of zero for the entire ticket, no matter how great the rest of the interaction was.

These are the absolute deal-breakers—the mistakes that can cause real harm to a customer or your business.

In an e-commerce setting, common examples of critical fails include:

- Giving out wrong information about your refund or return policy.

- Accidentally sharing another customer's personal information (a major privacy breach).

- Using language that is rude, dismissive, or unprofessional.

Defining these upfront makes your quality standards perfectly clear. It tells your team exactly where the uncrossable lines are and acts as a safety net against major service disasters. As you grow, you'll find that different types of AI customer support software can even help by automatically flagging potential critical fails, which saves your reviewers a ton of time.

Putting these pieces together—clear categories, weighted scoring, and defined critical fails—gives you a powerful blueprint ready to put into action.

To help you get started, here's a simple template you can adapt for your own e-commerce business.

Sample E-commerce QA Scorecard Template

| Category (Weight) | Criteria Example | Scoring Guidance (1-5 Scale) |
|---|---|---|
| Solution Quality (40%) | Provided an accurate and complete resolution to the customer's primary issue in the first response. | 1: Incorrect/incomplete info. 3: Correct but required follow-up. 5: Perfect, first-contact resolution. |
| Procedural Accuracy (20%) | Correctly tagged the ticket, used appropriate macros, and logged order details in Shopify. | 1: Major process errors. 3: Minor errors (e.g., wrong tag). 5: Flawless execution of all internal procedures. |
| Brand Tone & Empathy (25%) | Used brand-aligned language, personalized the response, and showed genuine empathy for the customer's issue. | 1: Robotic/impersonal tone. 3: Friendly but generic. 5: Exceptionally warm, personal, and empathetic. |
| Proactive Support (15%) | Anticipated a follow-up question or offered a relevant tip to enhance the customer's experience. | 1: Missed an obvious opportunity to help. 3: Handled the core issue only. 5: Went above and beyond to add value. |
| Critical Fail (Auto-0) | Shared PII, used profane language, or provided incorrect policy information causing financial impact. | A "Yes" in this category results in an automatic score of 0% for the entire interaction, regardless of other scores. |

This table is just a starting point, of course. Feel free to adjust the categories, criteria, and weights to perfectly match what matters most for your brand and your customers.
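If you track scores in a spreadsheet or a small script, the math behind this template is straightforward. Here's a minimal Python sketch of one way to do it. The category keys, the `qa_score` function, and the choice to map a rating of 1 to 0% and a rating of 5 to 100% are assumptions made for illustration, not part of any particular tool; you may prefer a different mapping.

```python
from __future__ import annotations

# Weights from the sample template; they must add up to 1.0 (100%).
WEIGHTS = {
    "solution_quality": 0.40,
    "procedural_accuracy": 0.20,
    "brand_tone_empathy": 0.25,
    "proactive_support": 0.15,
}


def qa_score(ratings: dict[str, int], critical_fail: bool = False) -> float:
    """Convert 1-5 category ratings into a weighted score from 0 to 100."""
    if critical_fail:
        # A critical fail overrides everything else on the ticket.
        return 0.0
    if set(ratings) != set(WEIGHTS):
        raise ValueError("ratings must cover exactly the scorecard categories")
    total = 0.0
    for category, weight in WEIGHTS.items():
        rating = ratings[category]
        if not 1 <= rating <= 5:
            raise ValueError(f"{category} rating must be between 1 and 5")
        # Assumed mapping: rescale 1-5 onto 0-1 so all 1s give 0 and all 5s give 100.
        total += weight * (rating - 1) / 4
    return round(total * 100, 1)
```

With this mapping, ratings of 5 for Solution Quality, 3 for Procedural Accuracy, 5 for Brand Tone & Empathy, and 3 for Proactive Support come out to 82.5, while any critical fail returns 0 no matter how strong the other ratings are.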

Running Calibration and Coaching Sessions That Actually Work

A brilliant QA scorecard is a great start, but honestly, it’s only half the battle. A scorecard is just a tool; its real power comes alive when your team actually uses it. This is where the human side of customer service quality assurance really shines: through calibration and coaching.

Without these two pieces, even the most detailed scorecard leads to inconsistent grading and frustrated agents. Think of it like a recipe for a perfect cake. Your scorecard is the recipe, but calibration is getting all the bakers to agree on what "a pinch of salt" actually means. Coaching is then showing them how to perfectly fold the batter every single time.

Get these right, and QA stops being a top-down audit and becomes a shared system for getting better, together.

Removing Subjectivity with Team Calibration

So, what is calibration? It’s simply the process of getting all your reviewers on the same page. The goal is to strip away the subjectivity so that a "good" ticket is rated as "good" by everyone, every time. This consistency is the bedrock of a trustworthy QA program.

If one reviewer is a tough grader and another is super lenient, agents will feel the process is unfair, and their scores will become meaningless. Calibration is the fix. It builds a shared, concrete understanding of your quality standards.

Running a calibration session is surprisingly straightforward:

- Pick a Ticket: Grab a recent customer interaction that has a bit of complexity to it.

- Review Separately: Have everyone who grades tickets (managers, leads, peer reviewers) score that same ticket on their own. No peeking or discussing yet!

- Share and Explain: Go around the room and have each person reveal their score and, more importantly, walk through their reasoning.

- Debate and Align: This is where the magic happens. Zero in on the ratings that don't match up. Why did one person score empathy as a "4" while another gave a "5"? The point isn't just to agree on a final score, but to hammer out why it's the correct score based on your defined standards.

By dedicating time to calibration, you're not just aligning scores; you're refining your quality standard in real-time. This process ensures your QA program remains fair, objective, and a reliable measure of performance.
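To make the "Debate and Align" step quicker, it can help to see at a glance where reviewers disagreed before the discussion starts. The sketch below is one hypothetical way to do that in Python; the `calibration_gaps` function and the reviewer and category names are made up for the example.

```python
from __future__ import annotations


def calibration_gaps(scores_by_reviewer: dict[str, dict[str, int]]) -> list[tuple[str, int]]:
    """List the categories where reviewers disagreed, widest gap first.

    scores_by_reviewer maps each reviewer's name to their 1-5 ratings per
    category for the same ticket. Categories with identical ratings are skipped.
    """
    if not scores_by_reviewer:
        return []
    categories = list(next(iter(scores_by_reviewer.values())))
    gaps = []
    for category in categories:
        ratings = [scores[category] for scores in scores_by_reviewer.values()]
        spread = max(ratings) - min(ratings)
        if spread > 0:
            gaps.append((category, spread))
    # Largest disagreement first, so the session starts with the biggest gap.
    gaps.sort(key=lambda item: item[1], reverse=True)
    return gaps
```

Categories with the widest spread are usually the ones where your scoring guidance needs the clearest wording, so they make a natural first agenda item.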

Reframing Coaching for Agent Growth

Once you have reliable data from your calibrated reviews, it’s time to coach. And here’s the biggest mistake I see managers make: they treat coaching sessions like a performance review or a disciplinary meeting. When QA is framed as a tool to "catch mistakes," it just creates a culture of fear.

Instead, think of coaching as a collaborative growth session. It's dedicated time to partner with an agent, celebrate what they're doing well, and strategize together on a few specific areas for improvement. This completely changes the dynamic, turning QA into a tool for success, not a reason to be nervous. In fact, 76% of organizations find that a well-run QA process directly boosts customer satisfaction, and great coaching is the engine that makes that happen.

A Framework for Actionable Feedback

Effective coaching all comes down to the feedback. Vague advice like, "You need to show more empathy," is completely useless. To actually help someone improve, feedback needs to be specific, behavioral, and focused on what to do next time.

Here’s a simple framework to make your feedback instantly more actionable:

- Instead of Vague Feedback: "Your solution wasn't clear."

- Try Actionable Feedback: "Next time a customer asks about our international shipping rates, let's also include a direct link to the shipping policy page. That way, they get all the details at once and won't have to write back."

- Instead of Vague Feedback: "You need more empathy."

- Try Actionable Feedback: "I noticed the customer mentioned they were frustrated with the delay. A great way to connect with them is to acknowledge that feeling right away. Something like, 'I can certainly understand your frustration with the shipping delay; let me look into this for you right away.'"

This approach focuses on future actions, not past errors, giving agents a clear and positive path forward. By running effective calibration and coaching sessions, you build a supportive culture where your entire team sees customer service quality as a shared mission to deliver truly outstanding experiences.

Using AI and Automation to Scale Your QA Efforts

Manually reviewing support tickets is a solid starting point, but let’s be honest: it’s like trying to fill a swimming pool with a teaspoon. It's slow, prone to bias, and simply doesn't scale as your brand grows.

Most e-commerce teams can only get through a tiny fraction of their conversations—maybe 2-3% at best. This leaves a massive blind spot, forcing you to make big decisions based on a small, and often unrepresentative, slice of data.

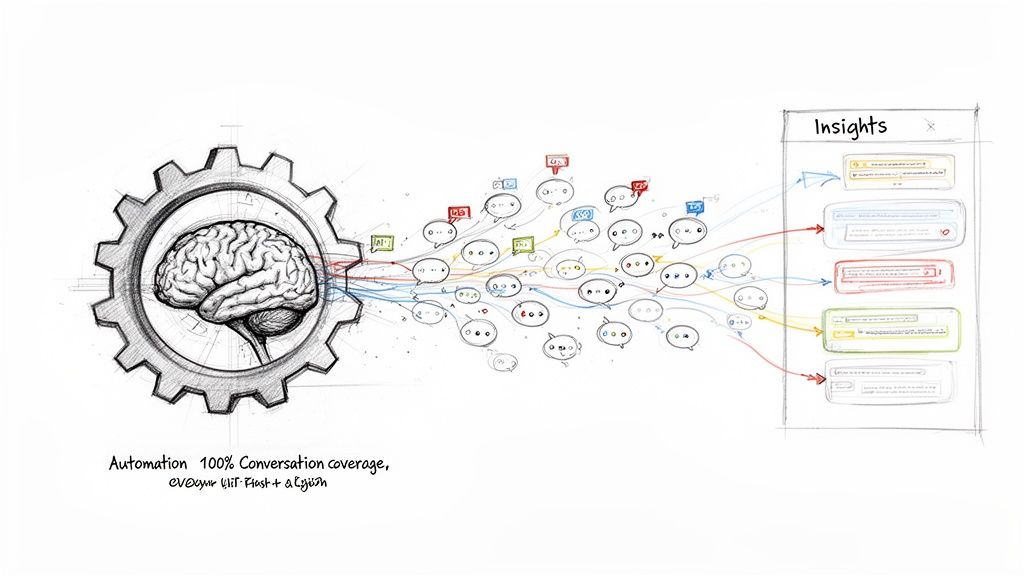

Thankfully, modern technology changes the game entirely. It moves quality assurance from a spot-checking chore into a powerful business intelligence engine. With AI and automation, you can finally stop guessing and start analyzing 100% of your customer interactions. This isn't just about grading more tickets; it’s about uncovering insights you never knew you were missing.

From Manual Spot-Checks to Total Visibility

Imagine a system that can read every single email, understand the customer's mood, and instantly score the interaction against your QA scorecard. That’s exactly what AI-powered platforms do.

This tech gives your QA program a serious upgrade by delivering:

- Automated Scoring: The AI grades conversations based on your exact criteria, freeing up countless hours for your team leads and managers.

- Sentiment Analysis: It can detect subtle shifts in tone—like growing frustration or moments of delight—across thousands of conversations, flagging trends before they turn into major problems.

- Issue Identification: The system automatically tags conversations about emerging product defects, shipping delays with a specific carrier, or confusing checkout instructions.

- Consistent Tracking: Automated scoring applies the same criteria to every conversation, which cuts down on reviewer-to-reviewer variation. Every agent gets evaluated against the same standard, every single time.

This move toward automation is quickly becoming the norm. A huge 88% of contact centers now use AI solutions for customer service, though only 25% have fully integrated automation into their day-to-day work. For e-commerce managers, tools that auto-classify emails and suggest replies can already deflect up to 70% of routine questions.

AI-driven QA closes the gap between wanting exceptional quality and having the resources to actually achieve it. It turns your support conversations into a rich, searchable database of operational intelligence.

The Practical Impact for Shopify Brands

For an e-commerce brand, this technology is a complete game-changer. An AI platform like MAILO AI doesn't just score tickets; it digs up deep operational insights that can push your business forward, often without needing a dedicated QA department.

It can pinpoint that a new marketing campaign is causing confusion about a discount code, or that a specific product is generating a spike in "damaged on arrival" complaints. These are the kinds of insights you’d almost certainly miss when only reviewing a handful of tickets each week.

This tech gives even small teams the analytical power of a large corporation. For a closer look, check out our guide on how AI is transforming e-commerce strategy.

Platforms that use automation also improve consistency and efficiency. You can explore detailed guides on integrating an AI chatbot for customer support to see how this works in practice. By automating the heavy lifting, you let your team focus on what humans do best: building relationships and solving complex problems.

The E-commerce QA Metrics That Really Move the Needle

So, you're grading support tickets. That's fantastic. But how do you prove your QA program is actually making a difference to the business? To get the real story, you need to look beyond individual ticket scores and focus on a few high-level Key Performance Indicators (KPIs).

These metrics are what connect your team's day-to-day work to the stuff that matters most: customer loyalty and retention. Without them, you're just grading conversations without a clear purpose.

The Big Three: Core Metrics for E-commerce QA

You could track a dozen different things, but for most e-commerce brands, it really boils down to three core metrics. Think of them as a powerful trio that paints a complete picture of your support quality.

- Internal Quality Score (IQS): This is simply the average score your agents get on their QA reviews. It’s your gut check—are we sticking to our own standards? Are we following the playbook we created?

- Customer Satisfaction (CSAT): You’ve seen this one before. It's that classic "How satisfied were you?" survey sent after a conversation. CSAT is your unfiltered, direct line into how customers feel about the support they received.

- First Contact Resolution (FCR): This one measures the percentage of customer issues you solve in the very first interaction. No back-and-forth, no follow-ups. A high FCR is a dead giveaway that your team is not just friendly, but also incredibly efficient and knowledgeable.

These three don't exist in a vacuum; they tell a story together. A perfect IQS is great, but if your CSAT is tanking, it's a huge red flag. It means your internal standards are out of sync with what your customers actually care about.
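For a sense of how these three numbers are calculated, here is a small Python sketch. It assumes each reviewed ticket carries a 0-100 QA score, an optional 1-5 survey rating, and a first-contact flag. Counting ratings of 4 or 5 as "satisfied" is a common convention rather than something defined here, and the `ReviewedTicket` and `support_metrics` names are invented for the example.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class ReviewedTicket:
    qa_score: float                 # 0-100 score from your internal scorecard
    csat: Optional[int]             # 1-5 survey rating, None if no response
    resolved_first_contact: bool    # True if solved without a follow-up


def support_metrics(tickets: list[ReviewedTicket]) -> dict[str, Optional[float]]:
    """Compute IQS, CSAT, and FCR as percentages for a batch of tickets."""
    if not tickets:
        raise ValueError("need at least one ticket")
    # IQS: average internal QA score.
    iqs = sum(t.qa_score for t in tickets) / len(tickets)
    # CSAT: share of survey responses rated 4 or 5 (assumed convention).
    responses = [t.csat for t in tickets if t.csat is not None]
    csat = 100 * sum(r >= 4 for r in responses) / len(responses) if responses else None
    # FCR: share of tickets resolved in the first interaction.
    fcr = 100 * sum(t.resolved_first_contact for t in tickets) / len(tickets)
    return {"iqs": iqs, "csat": csat, "fcr": fcr}
```

Note that CSAT is calculated only over customers who answered the survey, so a low response rate can make it swing more than IQS or FCR.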

Connecting the Dots: What Your Metrics Are Telling You

The real magic happens when you start looking at how these numbers influence each other. Let's say your IQS is climbing, which seems great, but your CSAT is stuck in neutral or even dropping. What gives?

This is a classic sign that your QA scorecard is too focused on internal busywork—like whether an agent used the right ticket tag—and not enough on what makes a customer happy, like a quick, kind, and correct solution.

This isn't a rare problem. Forrester's Global Customer Experience Index revealed that a shocking 21% of brands watched their customer experience scores fall, with 25% of US brands dropping for the second year in a row. For an online store, a QA program that ignores customer satisfaction isn't just a missed opportunity; it's a direct threat to your bottom line. You can dig into Forrester's full findings on the state of customer experience to see the data for yourself.

By constantly comparing your internal scores (IQS) with your external feedback (CSAT), you can make smart, data-driven tweaks. This ensures your team is always focused on the things that genuinely make a customer’s day better.
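One simple way to keep an eye on that comparison is to flag any period where IQS rose while CSAT stayed flat or fell. The Python sketch below does exactly that; the `out_of_sync_periods` name and both thresholds are arbitrary assumptions, so treat them as starting values to tune.

```python
from __future__ import annotations


def out_of_sync_periods(history: list[tuple[str, float, float]],
                        min_iqs_gain: float = 2.0,
                        csat_flat_band: float = 1.0) -> list[str]:
    """Return labels of periods where IQS climbed but CSAT did not follow.

    history is a chronological list of (label, iqs, csat) tuples, with both
    metrics on a 0-100 scale. A period is flagged when IQS rose by at least
    min_iqs_gain points while CSAT rose by no more than csat_flat_band points.
    """
    flagged = []
    for (_, prev_iqs, prev_csat), (label, iqs, csat) in zip(history, history[1:]):
        iqs_rising = iqs - prev_iqs >= min_iqs_gain
        csat_stalled = csat - prev_csat <= csat_flat_band
        if iqs_rising and csat_stalled:
            flagged.append(label)
    return flagged
```

A flagged period doesn't prove the scorecard is wrong, but it is a good prompt to reread a few tickets from that stretch and check whether the rubric rewards the things customers actually notice.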

E-commerce QA: Your Questions Answered

Jumping into a quality assurance program for your support team can feel like a big step. Let's tackle some of the most common questions that pop up so you can build a system that genuinely works for your e-commerce brand.

How Many Tickets Should I Review Per Agent Each Week?

When you're just starting out, a good rule of thumb is to review 3-5 conversations per agent, every week. That's the sweet spot. It gives you enough data to find meaningful coaching moments without bogging down your reviewers with a mountain of grading. Consistency is way more important than volume here.

Of course, as your team scales and ticket numbers climb, manually reviewing everything becomes impossible. That's where AI tools come in, giving you 100% coverage automatically. This lets you pivot from the grind of manual grading to focusing on what really matters: strategic coaching.
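If you're doing the manual version, picking those weekly tickets at random keeps reviewers from unconsciously choosing only the easy or the memorable ones. Here's a minimal sketch using Python's standard `random` module; the `weekly_sample` function and the `(agent, ticket_id)` input format are assumptions for illustration.

```python
from __future__ import annotations

import random
from collections import defaultdict
from typing import Optional


def weekly_sample(tickets: list[tuple[str, str]],
                  per_agent: int = 4,
                  seed: Optional[int] = None) -> dict[str, list[str]]:
    """Pick up to per_agent random ticket IDs for each agent.

    tickets is a list of (agent, ticket_id) pairs from the past week.
    Passing a seed makes the selection reproducible.
    """
    by_agent: dict[str, list[str]] = defaultdict(list)
    for agent, ticket_id in tickets:
        by_agent[agent].append(ticket_id)

    rng = random.Random(seed)
    return {
        # Agents with fewer tickets than per_agent simply get all of theirs reviewed.
        agent: rng.sample(ids, min(per_agent, len(ids)))
        for agent, ids in sorted(by_agent.items())
    }
```

You can combine a random sample like this with targeted picks (low CSAT, escalations) so each agent's weekly review covers both typical and high-stakes conversations.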

What Is the Biggest Mistake to Avoid When Starting QA?

The number one mistake I see is creating a super complicated or vague scorecard from day one. If your checklist has 20 items, or relies on subjective criteria like a bare "was empathetic" with no scoring guidance, you're setting yourself up for failure. It leads to inconsistent grades, confused reviewers, and agents who just feel frustrated.

Keep it simple. Start with four or five core categories, and anchor each one to things you can actually see and measure. Think "Used the correct return policy macro" or "Provided an accurate tracking link." You can always add more complexity later, once you’ve built a solid foundation.

A good QA program isn't about creating a labyrinth of rules. It’s about building a clear, fair, and consistent system that connects what your agents do directly to a better customer experience. When you're new to this, simplicity is your best friend.

How Do I Get My Support Team to Embrace QA?

This is crucial. You have to position QA as a tool for professional development, not a way to punish people. When your agents see it as a system designed to help them get better at their jobs, they'll actually get on board.

Here are a few ways to build that trust:

- Bring them into the process: Ask for your team's feedback when you’re creating the scorecard. It builds a real sense of ownership.

- Make it about coaching: Frame review sessions as collaborative problem-solving, not just pointing out what they did wrong.

- Celebrate the wins: Give a shout-out to agents who are crushing it and show how their awesome work impacts the company's success.

Can We Run a QA Program Without a Dedicated Specialist?

Yes, absolutely! For most growing e-commerce brands, a support manager or a team lead is the perfect person to run the QA process. The key is to block out dedicated time for it on the calendar each week and treat that time as sacred—don't let it get bumped for other "urgent" tasks.

Another route is using an AI platform to handle the scoring and number-crunching for you. This frees up managers to run a super effective customer service quality assurance program by spending their time on high-impact coaching, not tedious manual reviews.

Ready to stop spot-checking and start seeing the full picture? MAILO AI automates your quality assurance, analyzing 100% of your conversations to deliver actionable insights that boost team performance and customer loyalty. Start your free trial with MAILO AI and transform your Shopify support today.